This is the second part of the Couchbase and CFML series we started last week. In our first post, “Installation and Introduction to Couchbase”, we talked about Couchbase Server, how to install it, and how it can help create a fast and scalable caching layer for your applications. Today we’re going to talk about setting up a Couchbase cluster and look at our first use for it: a Hibernate secondary cache for ColdFusion ORM.

Horizontal Scalability

In our previous post we set up a very simple cluster of only one node. Let’s look at how Couchbase lets you expand your cluster horizontally as your needs increase. A cluster can have as many nodes as you want, seriously! All nodes in a cluster share the same buckets and the same configuration. When you set up the first node, you choose how much RAM every node in that cluster will allocate to Couchbase. A new node can join the cluster only if it has enough RAM to meet that per-node size. Therefore, the total amount of RAM in the entire cluster is the node size times the number of nodes.

Total Cluster RAM = NodeRAM x NumNodes

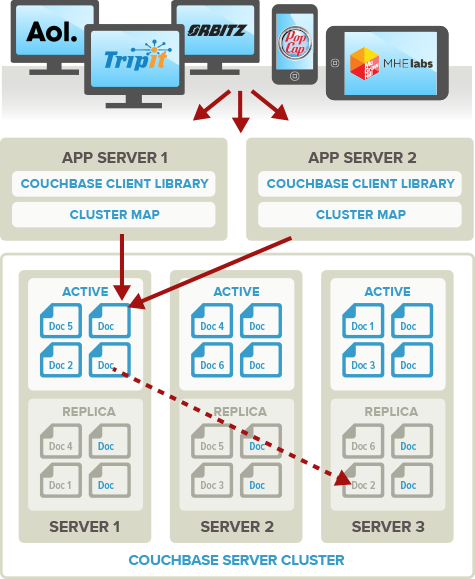
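To make the arithmetic concrete, here is a small Python sketch of the two rules above: the cluster total is the per-node quota times the node count, and a new node is admitted only if it can allocate that quota. The function names and the 2048 MB quota are made up for illustration; this is not a Couchbase API.

```python
def total_cluster_ram(node_quota_mb: int, num_nodes: int) -> int:
    """Total Cluster RAM = NodeRAM x NumNodes."""
    return node_quota_mb * num_nodes


def can_join(available_ram_mb: int, node_quota_mb: int) -> bool:
    """A new node can join only if it can allocate the cluster's per-node quota."""
    return available_ram_mb >= node_quota_mb


if __name__ == "__main__":
    quota_mb = 2048  # illustrative per-node quota chosen when the first node was set up
    print(total_cluster_ram(quota_mb, 4))  # 8192
    print(can_join(1024, quota_mb))        # False: too little RAM for the quota
```

Adding a fifth node with the same quota simply grows the total by another 2048 MB, which is the whole point of scaling horizontally.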

The documents you store in the cluster are spread evenly across all the nodes. So if you have 100 documents and 2 nodes, there will be roughly 50 documents per node. Think of it like hard disks in RAID 0, which stripes data evenly across the array so that reads and writes are distributed and performed in parallel.

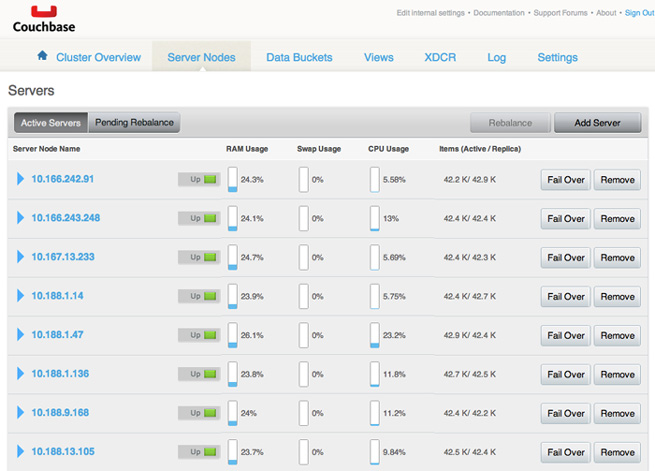
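How does a key end up on a particular node? Couchbase hashes each key (with CRC32) into one of a fixed set of partitions called vBuckets, and the cluster map assigns those vBuckets to nodes. The Python sketch below is a simplified model of that idea, not Couchbase's exact algorithm: the plain `crc32 % 1024` hash, the 1024-vBucket count, and the round-robin vBucket-to-node assignment are all assumptions for illustration.

```python
import zlib
from collections import Counter

NUM_VBUCKETS = 1024  # assumption: a fixed number of partitions ("vBuckets")


def vbucket_for(key: str) -> int:
    # Simplified hash: CRC32 of the key, modulo the vBucket count.
    return zlib.crc32(key.encode("utf-8")) % NUM_VBUCKETS


def build_vbucket_map(nodes: list[str]) -> list[str]:
    # Assign vBuckets to nodes round-robin so each node owns an equal share.
    return [nodes[vb % len(nodes)] for vb in range(NUM_VBUCKETS)]


def node_for(key: str, vbucket_map: list[str]) -> str:
    # Any client holding the same map computes the same owner for a key.
    return vbucket_map[vbucket_for(key)]


if __name__ == "__main__":
    vb_map = build_vbucket_map(["node-a", "node-b"])
    counts = Counter(node_for(f"doc::{i}", vb_map) for i in range(100))
    print(dict(sorted(counts.items())))  # close to 50 documents on each node
```

Because placement is decided by hashing rather than by a central lookup, the split is approximately, not exactly, 50/50, and the more documents you store, the more even it gets.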

You can join a new server to an existing Couchbase cluster as the last step of the Couchbase installer. If the server is already installed, log into the admin console of any server in your cluster and click “Add Server” on the “Server Nodes” tab. Enter the address, username, and password of the new node and you’re on your way.

Note: when you add a server node to an existing cluster, it’s assimilated by the Borg, so to speak: any buckets and data on it are wiped in favor of the data in the cluster it joins.

After adding the server, issue a rebalance on the cluster to redistribute the stored documents evenly across all current nodes.
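To illustrate what a rebalance has to accomplish, here is a Python sketch that takes a vBucket-to-node map and produces a new one in which every current node owns an (almost) equal share, moving only the vBuckets that must move. This is a simplified model we wrote for illustration, not Couchbase's actual rebalance algorithm (which also moves replicas and streams data while the cluster stays online); the node names and the 1024-vBucket count are assumptions.

```python
from collections import Counter


def rebalance(vbucket_map: list[str], nodes: list[str]) -> list[str]:
    """Return a new vBucket map in which every node in `nodes` owns an
    (almost) equal share, moving only the vBuckets that have to move."""
    base, extra = divmod(len(vbucket_map), len(nodes))
    # Target share per node; the first `extra` nodes take one additional vBucket.
    target = {node: base + (1 if i < extra else 0) for i, node in enumerate(nodes)}
    owned = Counter(owner for owner in vbucket_map if owner in target)

    # Pass 1: collect vBuckets on removed nodes or on nodes above their target.
    to_move = []
    for vb, owner in enumerate(vbucket_map):
        if owner not in target:
            to_move.append(vb)
        elif owned[owner] > target[owner]:
            owned[owner] -= 1
            to_move.append(vb)

    # Pass 2: hand each displaced vBucket to a node still below its target.
    new_map = list(vbucket_map)
    for vb in to_move:
        receiver = next(node for node in nodes if owned[node] < target[node])
        new_map[vb] = receiver
        owned[receiver] += 1
    return new_map


if __name__ == "__main__":
    NUM_VBUCKETS = 1024  # assumption: fixed partition count
    old_map = [("node-a", "node-b")[vb % 2] for vb in range(NUM_VBUCKETS)]
    new_map = rebalance(old_map, ["node-a", "node-b", "node-c"])  # node-c just joined
    print(dict(sorted(Counter(new_map).items())))  # {'node-a': 342, 'node-b': 341, 'node-c': 341}
    print(sum(a != b for a, b in zip(old_map, new_map)))  # 341
```

Only about a third of the vBuckets move when the third node joins; the rest stay where they are, which is why a rebalance does not have to reshuffle the entire data set.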

Durability

Couchbase doesn’t stop there; it also lets you keep replicas of your data. If we take the example cluster above and configure it to store one replica, each node will still act as primary storage for 50 documents, but it will also store replicas of the other node’s 50 documents. Now your cluster can survive a node going offline. Once you fail over the bad node, the remaining node holds all 100 documents and becomes the new primary node for all of them. This is more like RAID 10 (striping plus mirroring), which lets you lose a disk without losing any data.
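The two-node, one-replica example above can be simulated in a few lines of Python. This sketch is ours, not Couchbase code: `place` and `fail_over` are hypothetical helpers, and placing replicas on "the next node around the ring" is an assumption chosen to keep the example simple. What it does show faithfully is the rule that every copy of a document lives on a different node, so a single failure leaves a surviving copy to promote.

```python
from collections import Counter


def place(doc_ids, nodes, num_replicas=1):
    """Map each document to [active_node, replica_node, ...] on distinct nodes."""
    if num_replicas + 1 > len(nodes):
        raise ValueError("each copy needs its own node")
    placement = {}
    for i, doc in enumerate(doc_ids):
        start = i % len(nodes)
        # Active copy on one node, replicas on the next node(s) around the ring.
        placement[doc] = [nodes[(start + k) % len(nodes)] for k in range(num_replicas + 1)]
    return placement


def fail_over(placement, failed_node):
    """Remove a failed node; the first surviving copy of each document becomes active."""
    result = {}
    for doc, copies in placement.items():
        survivors = [node for node in copies if node != failed_node]
        if not survivors:
            raise RuntimeError(f"{doc} lost: no surviving copy")
        result[doc] = survivors
    return result


if __name__ == "__main__":
    docs = [f"doc::{i}" for i in range(100)]
    placement = place(docs, ["node-a", "node-b"], num_replicas=1)
    print(Counter(copies[0] for copies in placement.values()))  # active copies per node
    print(Counter(copies[1] for copies in placement.values()))  # replica copies per node
    after = fail_over(placement, "node-a")
    print(Counter(copies[0] for copies in after.values()))      # node-b is active for all 100
```

With `num_replicas=0`, failing a node raises the "lost" error for half of the documents, which is exactly the situation replicas are there to prevent.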

It gets even better: you can have as many replicas as you want, up to a maximum of 3. The number of replicas equals the number of nodes that can go down without losing any data. Once you add nodes back into the cluster, you issue a rebalance, which runs while the cluster stays online and redistributes all the documents evenly across the currently available nodes again. Remember, the number of replicas you can actually use is at most one less than the number of nodes. That means if you only have 3 nodes, you can’t have more than 2 replicas.

Maximum number of replicas = min(NumNodes - 1, 3)
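The same rule as a tiny Python function (the name is ours, for illustration only), which also tells you how many node failures a cluster of a given size can absorb without data loss:

```python
MAX_REPLICAS = 3  # per-bucket upper limit mentioned above


def max_replicas(num_nodes: int) -> int:
    """Maximum number of replicas = min(NumNodes - 1, 3), never below zero."""
    return max(0, min(num_nodes - 1, MAX_REPLICAS))


if __name__ == "__main__":
    for n in range(1, 6):
        # With R replicas, up to R nodes can be lost without losing data.
        print(n, "nodes ->", max_replicas(n), "replicas")
```

Past four nodes, adding hardware buys you capacity and throughput but not additional replicas.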

With this approach, you can use Couchbase to handle very large data sets and serve a large number of clients efficiently, since the cluster can grow as needed. Clients keep their own map of the nodes in the cluster and connect directly to the node that holds the specific document they want to access. This also removes single points of failure. Unlike a single hulking RDBMS server holding your entire data set, you can now scale flexibly, re-adjusting the cluster to handle failures, upgrades, and additions without any downtime at all.

Failover

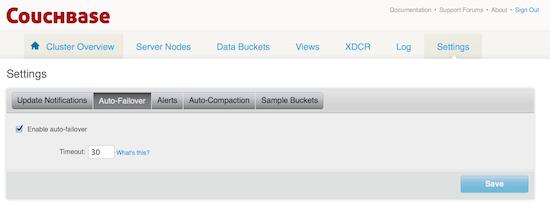

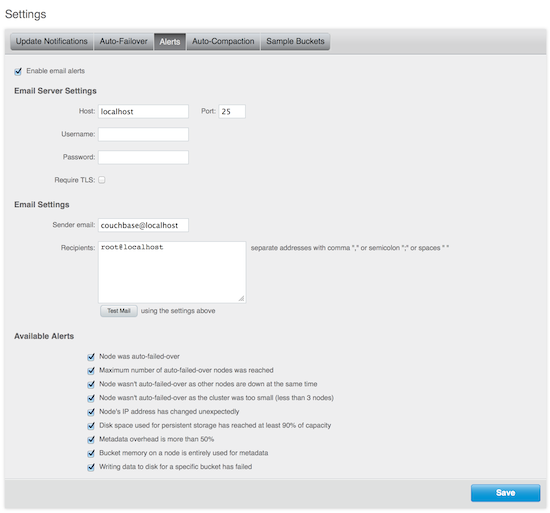

Couchbase also has automatic failover, which kicks in when you’re using replicas and have at least 3 nodes. The cluster automatically removes the bad node and shifts access to the affected documents over to their replicas. It can also send you e-mail notifications when this happens, so your admin can look into the issue, manually fail over nodes, and issue rebalances as needed. Couchbase is designed to default to the safest possible operation for your data, including safeguards against rolling outages and data loss. For instance, it won’t automatically fail over a node if there aren’t enough nodes left to keep the required number of replicas. It also won’t remove more than one node at a time without human intervention, to prevent a domino effect across all your nodes.

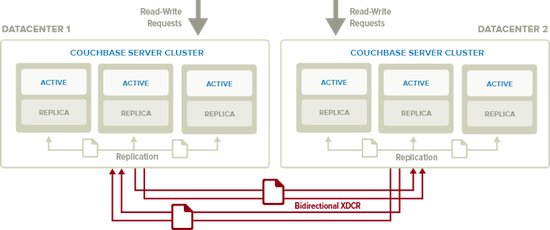
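The safeguards described above can be read as a simple decision function. The Python sketch below is a hypothetical policy we wrote to mirror those rules; it is not Couchbase’s implementation. In particular, interpreting "enough nodes left to keep the required number of replicas" as "remaining nodes must hold the active copy plus every replica on distinct nodes" is our assumption.

```python
from dataclasses import dataclass


@dataclass
class ClusterState:
    num_nodes: int                 # nodes in the cluster, including the unresponsive one
    num_replicas: int              # replicas configured for the bucket
    unacknowledged_failovers: int  # auto-failovers an admin has not yet dealt with


def should_auto_fail_over(state: ClusterState) -> bool:
    """Hypothetical policy mirroring the safeguards described above."""
    if state.num_replicas < 1:
        return False  # nothing to promote in place of the failed node
    if state.num_nodes < 3:
        return False  # automatic failover needs at least 3 nodes
    if state.unacknowledged_failovers >= 1:
        return False  # never remove a second node without human intervention
    remaining = state.num_nodes - 1
    if remaining < state.num_replicas + 1:
        return False  # too few nodes left to hold the active copy plus every replica
    return True


if __name__ == "__main__":
    print(should_auto_fail_over(ClusterState(3, 1, 0)))  # True
    print(should_auto_fail_over(ClusterState(3, 1, 1)))  # False: needs an admin first
    print(should_auto_fail_over(ClusterState(3, 2, 0)))  # False: 2 nodes cannot hold 3 copies
```

Every branch that returns `False` leaves the decision to a human, which is the "safest possible operation" default in code form.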

XDCR Architecture

Couchbase Server can also replicate data from one cluster to another, known as cross data center replication (XDCR). This is indispensable for disaster recovery and for bringing data closer to geographically distributed users. XDCR and intra-cluster replication run simultaneously, and the clusters keep serving reads and writes from your applications while replication is in progress.

Hibernate Secondary Cache

We’ll finish up by introducing the first application of Couchbase that we’ll demo in our next post. Hibernate is the de facto ORM solution in the Java world, and now in the CFML world as well, thanks to the tight integrations Adobe and Railo have built into their application servers. Hibernate uses in-process session memory (its first-level cache) to store entities during operation, but that cache only lives as long as the Hibernate session. For better performance, Hibernate lets you add a secondary cache as an extra layer between the Hibernate session and the database. This takes load off the database by keeping frequently used entity property values in the cache. If your application is spread across more than one application server, you can use an out-of-process cache shared between your servers for the most effective caching layer.
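The lookup order described above (session first, then the shared secondary cache, then the database) can be modeled in a few lines of Python. This is purely illustrative: `Session`, `SharedCache`, and `Database` are hypothetical stand-ins, not Hibernate’s actual cache SPI or ColdFusion’s ORM functions, and the `Artist` entity is made up.

```python
class SharedCache:
    """Stand-in for an out-of-process cache shared by every application server."""

    def __init__(self):
        self._entries = {}

    def get(self, key):
        entry = self._entries.get(key)
        return None if entry is None else dict(entry)  # hand out a copy

    def put(self, key, state):
        self._entries[key] = dict(state)  # store property values, not live objects


class Database:
    def __init__(self, rows):
        self._rows = rows
        self.queries = 0  # how many times we actually hit the database

    def select(self, key):
        self.queries += 1
        return dict(self._rows[key])


class Session:
    """One unit of work; its first-level cache dies with it."""

    def __init__(self, second_level, db):
        self.first_level = {}
        self.second_level = second_level
        self.db = db

    def load(self, entity, entity_id):
        key = (entity, entity_id)
        if key in self.first_level:           # 1. already loaded in this session
            return self.first_level[key]
        state = self.second_level.get(key)    # 2. shared secondary cache
        if state is None:
            state = self.db.select(key)       # 3. fall back to the database
            self.second_level.put(key, state)
        self.first_level[key] = state
        return state


if __name__ == "__main__":
    db = Database({("Artist", 1): {"firstName": "Pablo", "lastName": "Picasso"}})
    cache = SharedCache()
    Session(cache, db).load("Artist", 1)  # e.g. a request on server 1
    Session(cache, db).load("Artist", 1)  # a later request, possibly on server 2
    print(db.queries)  # 1
```

The second session starts with an empty first-level cache, yet it never touches the database because the shared cache already holds the entity’s property values; that is the payoff of an out-of-process secondary cache across a server farm.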

Adobe ColdFusion and Railo both support Ehcache out of the box as a secondary cache, but as an in-process cache it is limited to the RAM of a single server. To distribute or replicate Ehcache, you have to modify the default configuration and add some of the Terracotta libraries for Ehcache. You also have to deal with Terracotta’s licensing model, as their community version only allows one node for free. Luckily for us, Hibernate is extensible and allows custom cache providers to be implemented for a cache engine of your choosing. Enter Couchbase, a perfect Hibernate secondary cache!

In our next post, we’ll show how to connect Couchbase to both Adobe ColdFusion and Railo as a distributed, highly available ORM cache that can be shared by your entire CF server farm, thanks to Couchbase’s support for the Memcached binary protocol.
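For a feel of what speaking the Memcached binary protocol means on the wire, the Python sketch below builds a GET request: a 24-byte big-endian header (magic byte 0x80, opcode 0x00 for GET, key length, extras length, data type, vBucket id, total body length, opaque, CAS) followed by the key. Couchbase uses the 2-byte field after the data type to carry the vBucket id. The `build_get` name and its defaults are ours, and the code only builds bytes; it does not open a connection to a server.

```python
import struct

# Request header: magic, opcode, key length, extras length, data type,
# vBucket id, total body length, opaque, CAS (24 bytes, network byte order).
HEADER = struct.Struct(">BBHBBHIIQ")
MAGIC_REQUEST = 0x80
OPCODE_GET = 0x00


def build_get(key: str, vbucket: int = 0, opaque: int = 0) -> bytes:
    key_bytes = key.encode("utf-8")
    extras = b""  # GET requests carry no extras
    header = HEADER.pack(
        MAGIC_REQUEST,
        OPCODE_GET,
        len(key_bytes),
        len(extras),
        0,                            # data type: raw bytes
        vbucket,                      # which partition the key belongs to
        len(extras) + len(key_bytes), # total body length (no value for GET)
        opaque,                       # echoed back by the server in the response
        0,                            # CAS: not used for a plain GET
    )
    return header + extras + key_bytes


if __name__ == "__main__":
    packet = build_get("Hello")
    print(len(packet))       # 29: 24-byte header + 5-byte key
    print(packet[:4].hex())  # 80000005
```

Because a Memcached-compatible client only needs to produce frames like this one, existing Memcached-based cache providers can talk to a Couchbase bucket.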

Read our next article in the series: Couchbase: Secondary ORM Configuration & Setup.
